Virtual World

"Time to go virtual where the cables meet"

Have you ever had a pet? One that needed looking after when you went on vacation? Yeah, they can be pretty demanding.

It's 2008 and FoodDays has picked up a few schools, maybe a dozen now, and hosting at home is becoming a bit of a drag, not least when I want to go on vacation - who will fix the servers when I'm not there?

A friend of mine made me an amazing offer: "Do you want to host your server in my datacenter?" This, I thought, was an opportunity not to be missed. However, it was not totally straightforward: "Oh, your servers all run on 48v DC, right?"

We all know how the globe is criss-crossed with undersea cables that connect the Internet in different countries together. However, have you ever wondered where they go when they get to land? Neither had I until now!

Imagine a town, not too far from here, in New Jersey, and an unassuming building, far inland from the sea, safe and secure. It is a very important building, however, as it is one of only a handful around the country that connect the US to the world. That means that it has to be safe, secure, and able to withstand the worst weather and/or physical attack imaginable.

As it turns out, though, once you've built such a grand place and connected it to the Internet, there's not much scope for expansion, and every investor these days wants to see constant growth in anything that they invest in. Hence, they hatched a plan to offer datacenter hosting in this most secure place. To get started, they offered it to a select few 'friends and family' to see how it worked out, and one of those was my friend Rich.

I arrived on a Saturday morning with two rack mount servers and a disk array loaded into the back of the car. The address that I was given didn't seem right. Was it the back of a shopping mall or just a very dull-looking office block? I couldn't tell. If you live in the area, I'm sure that you've driven past it and I guarantee that you didn't give it a second look.

At the reception, it just felt like any office block on a Saturday - empty. However, I was issued a badge and keycard and given some simple instructions about where I could go. Once out of the reception area, though, it was a different world, and definitely not an office block - the total lack of windows and the metre-thick concrete walls were a giveaway that this building was not ordinary.

I met my friend in a hall the size of a football pitch; its oppressive concrete walls and fluorescent lighting endowed it with all the charm of a '50s psychiatric ward from a low-budget movie. He had access to two padlocked cages, each the size of... well, a room in that psychiatric ward. However, these cages included power and fibre internet feeds.

Rich had already set up his servers and we loaded mine into the rack. The servers I had were from SuperMicro and came ready to go, each with remote access cards and loads of redundant capabilities.

Once the servers were up and tested, ready for my remote access, we were lucky enough to get a tour of the facility. It turned out that our area was originally storage and potentially a replica of the main equipment room. The building is designed to survive the most catastrophic disasters - as infrastructure people, we all like to add redundancy, but this building had it in spades!

Four power connections from multiple utility companies come into the building, each from a different direction, one at each of its four corners. The incoming and outgoing connections to the Internet are buried far deeper underground than any construction work in the area might dig, and they radiate in a dozen directions with no straight lines, to avoid discovery.

Power in the building was not the usual 120/240/480v AC that you might find in a typical office or factory but instead the power common in telephone exchanges around the world - 48v DC. This meant that most equipment was powered directly from a room filled with lead-acid batteries, much like car batteries except that each one was the size of a wheelbarrow. These batteries could power the facility for some hours and were constantly charged from the utility companies, the power being drawn from the cheapest and most available provider at any given moment. In case of failure, the whole facility could be powered from a diesel generator, the engine of which would normally be found powering a ship! Oh, and in case there was an issue starting the generator, there was a backup... and a backup for that. So, three enormous diesel generators in total, each able to power the facility with capacity to spare.

Joking, I asked our guide what the uptime target was. He looked at me like he'd never been asked that before. His response: "All the time."

Back home, I was able to remote into my servers and set up FoodDays in its new home, a proper data center... then I took a vacation.

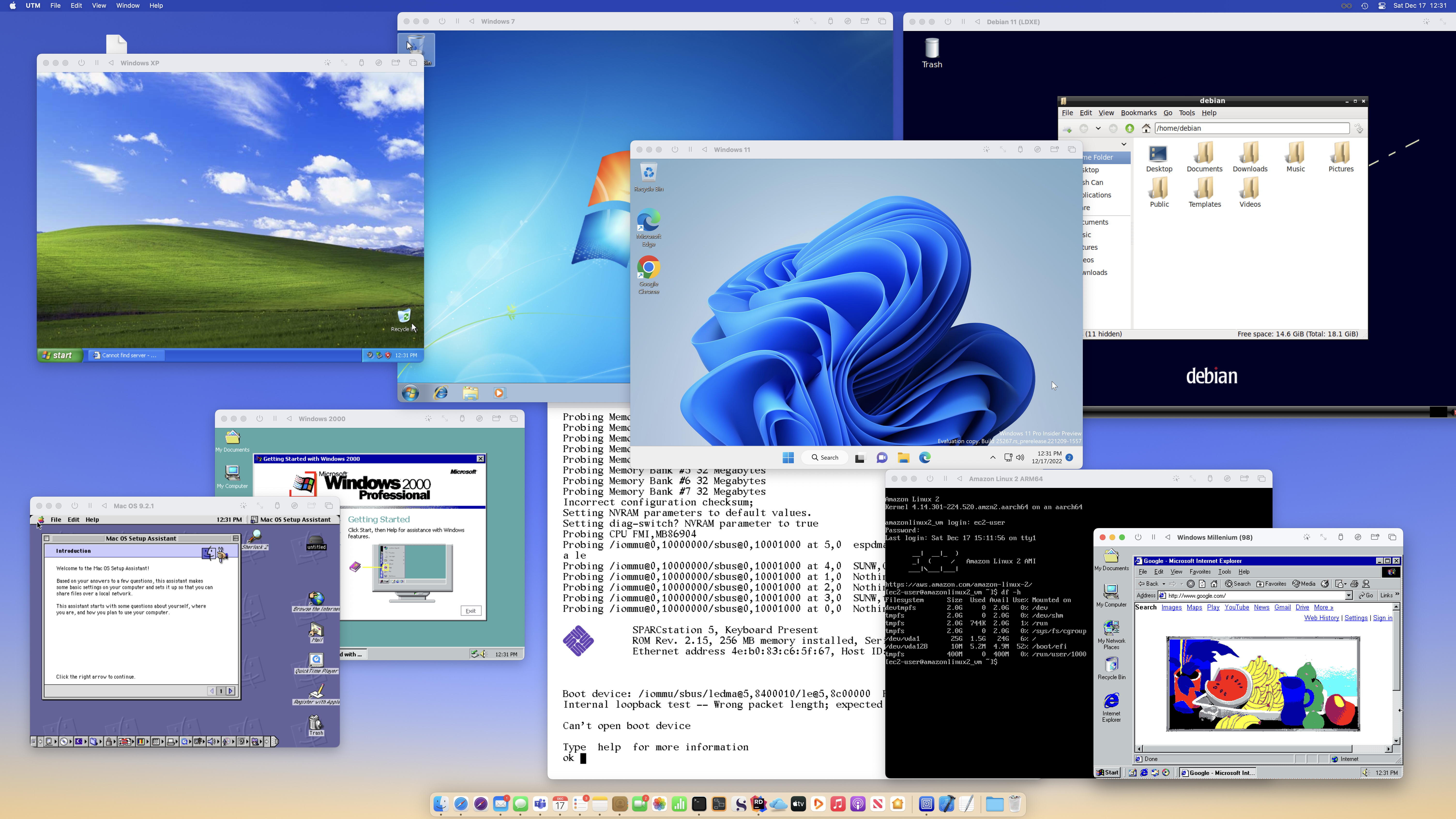

Mac OS 9 (PPC), Amazon Linux 2 ARM, Debian 11 ARM (LXDE), Windows 11 ARM, Solaris 5 (SPARC), Windows 7 x64, Windows XP x86, Windows 2000 x86 & Windows 98/Millennium x86 running on an Apple Silicon Mac (ARM64)

Tech deep dive:

I first encountered virtualization in about 1999 with VMware - probably the first solution we all saw. I remember amazing friends and colleagues by running Windows 98, Windows Millennium and Windows 2000 Professional (yeah, that's when Windows Server had a desktop friend) all on the same computer at the same time!

I looked at VMware for server virtualization but, at the time, it was expensive, and a new kid on the block was offering a free/small business solution called Citrix XenServer.

XenServer also had a unique feature at the time - live migration, which Citrix called XenMotion. What that meant was that you could connect two servers together and to a shared iSCSI storage array, with each server being able to access and launch any of the virtual machines on the shared storage. The party trick, though, was the migration itself - a running virtual machine could be moved from one host to another... while it was running... without a reboot... without any interruption in service! It was witchcraft, but it worked. I could move the DB and web server onto just one host, update the idle host, move the VMs back, and then update the other host.
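The update routine described above can be sketched as a small simulation. This is only an illustration of the ordering, not XenServer's API: `Host`, `live_migrate`, `evacuate`, `patch` and `rolling_update` are hypothetical names, and a "migration" here is just moving a VM name from one set to another.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A hypothetical virtualization host in a two-node pool."""
    name: str
    version: int = 1
    vms: set[str] = field(default_factory=set)


def live_migrate(vm: str, source: Host, target: Host) -> None:
    """Stand-in for a live migration: the VM changes host but keeps running."""
    source.vms.remove(vm)
    target.vms.add(vm)


def evacuate(source: Host, target: Host) -> None:
    """Move every VM off `source` so that it becomes idle."""
    for vm in sorted(source.vms):
        live_migrate(vm, source, target)


def patch(host: Host) -> None:
    """Update a host; refuse if it is still running anything."""
    if host.vms:
        raise RuntimeError(f"{host.name} still runs {sorted(host.vms)}")
    host.version += 1


def rolling_update(a: Host, b: Host) -> None:
    """Update both hosts while every VM keeps running on one or the other."""
    home_on_b = set(b.vms)          # remember the original layout
    evacuate(a, b)                  # everything now runs on b
    patch(a)                        # a is idle, safe to update
    evacuate(b, a)                  # move the VMs back, all onto a
    patch(b)                        # b is idle, safe to update
    for vm in sorted(home_on_b):    # restore b's original workload
        live_migrate(vm, a, b)
```

With `a = Host("host-a", vms={"db"})` and `b = Host("host-b", vms={"web"})`, calling `rolling_update(a, b)` leaves both hosts one version higher and each VM back where it started. The guard in `patch` is the important part: a host is only ever touched once it is empty.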

In my case, I now had a more enterprise-grade infrastructure: two SuperMicro servers, equipped with 48v power supplies, coupled to a Promise VTrak storage array filled with Western Digital disks. The servers were equipped with SuperMicro's remote access technology, which allowed me to use the screen, keyboard and mouse over the Internet. That, coupled with the VTrak's web interface, allowed me to do pretty much anything to the machines that could be done locally; that even included wiping and re-installing the entire OS!

Virtualization changed the game. I could now remotely run the infrastructure of FoodDays without downtime. I could start up new web servers running the latest version of the software and transition without interruption as well as test new versions of FoodDays in safety.
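The "start a new web server, then transition" idea can be sketched like this. It is a toy model rather than the actual FoodDays setup: `Router`, `cut_over` and the `healthy` check are hypothetical names, and the health check is whatever test you trust before sending real traffic to the new version.

```python
from typing import Callable


class Router:
    """Hypothetical front end that sends all traffic to one named backend."""

    def __init__(self, backends: dict[str, str], live: str) -> None:
        self.backends = backends    # backend name -> software version it runs
        self.live = live            # name of the backend receiving traffic

    def handle(self) -> str:
        """Return the version that would serve a request right now."""
        return self.backends[self.live]


def cut_over(router: Router, name: str, version: str,
             healthy: Callable[[str], bool]) -> bool:
    """Start a new backend beside the live one; switch only if it is healthy."""
    router.backends[name] = version     # new server starts alongside the old one
    if not healthy(version):
        del router.backends[name]       # discard it; live traffic never moved
        return False
    router.live = name                  # one switch, the old server stays as fallback
    return True
```

For example, starting from `Router({"web-1": "v1"}, live="web-1")`, a call to `cut_over(router, "web-2", "v2", healthy=lambda v: True)` moves traffic to the new server, while a failed health check leaves the old one serving untouched.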

The other big difference... The whole infrastructure ran on Linux and that was an even bigger change to come.

Michael Thwaite
